It is now common for an organisation to run multiple Kubernetes clusters. Typical reasons include:

To isolate different environments: For example, you might have one cluster for development, one for staging, and one for production. This can help to prevent problems in one environment from affecting other environments.

To meet compliance requirements: Some organisations may have compliance requirements that dictate that certain applications must be deployed to separate clusters.

To support different regions: You can deploy applications to different clusters in different regions to improve performance and availability for users in those regions.

Importance of having a centralised location for viewing resource summaries

Managing multiple Kubernetes clusters effectively requires a centralised location for viewing a summary of resources. Here are some key reasons why this is important:

Centralised Visibility: A central location provides a unified view of resource summaries, allowing you to monitor and visualise resources from all your clusters in one place. This simplifies issue detection, trend identification, and problem troubleshooting across multiple clusters.

Efficient Troubleshooting and Issue Resolution: With a centralised resource view, you can swiftly identify the affected clusters when an issue arises, compare them with the others, and narrow down potential causes. This comprehensive overview of resource states and dependencies enables efficient troubleshooting and quicker problem resolution.

Enhanced Security and Compliance: Centralised resource visibility strengthens security and compliance monitoring. It enables you to monitor cluster configurations, identify security vulnerabilities, and ensure consistent adherence to compliance standards across all clusters. You can easily track and manage access controls, network policies, and other security-related aspects from a single location.

Sveltos solution

Sveltos is an open-source Kubernetes add-on controller that simplifies the deployment and management of add-ons across multiple Kubernetes clusters.

It can also collect and display information about resources in managed clusters from a central location.

To do this, Sveltos uses three main concepts:

ClusterHealthCheck is the CRD used to tell Sveltos from which set of clusters to collect information;

HealthCheck is the CRD used to tell Sveltos which resources to collect;

Sveltosctl is the CLI used to display collected information.

For example, you could use a ClusterHealthCheck to tell Sveltos to collect information from all managed clusters labelled env=prod. You could then use one or more HealthCheck instances to tell Sveltos to collect information about the Pods, Deployments, and Services in those clusters. Finally, you could use sveltosctl to display the collected information in a human-readable format.

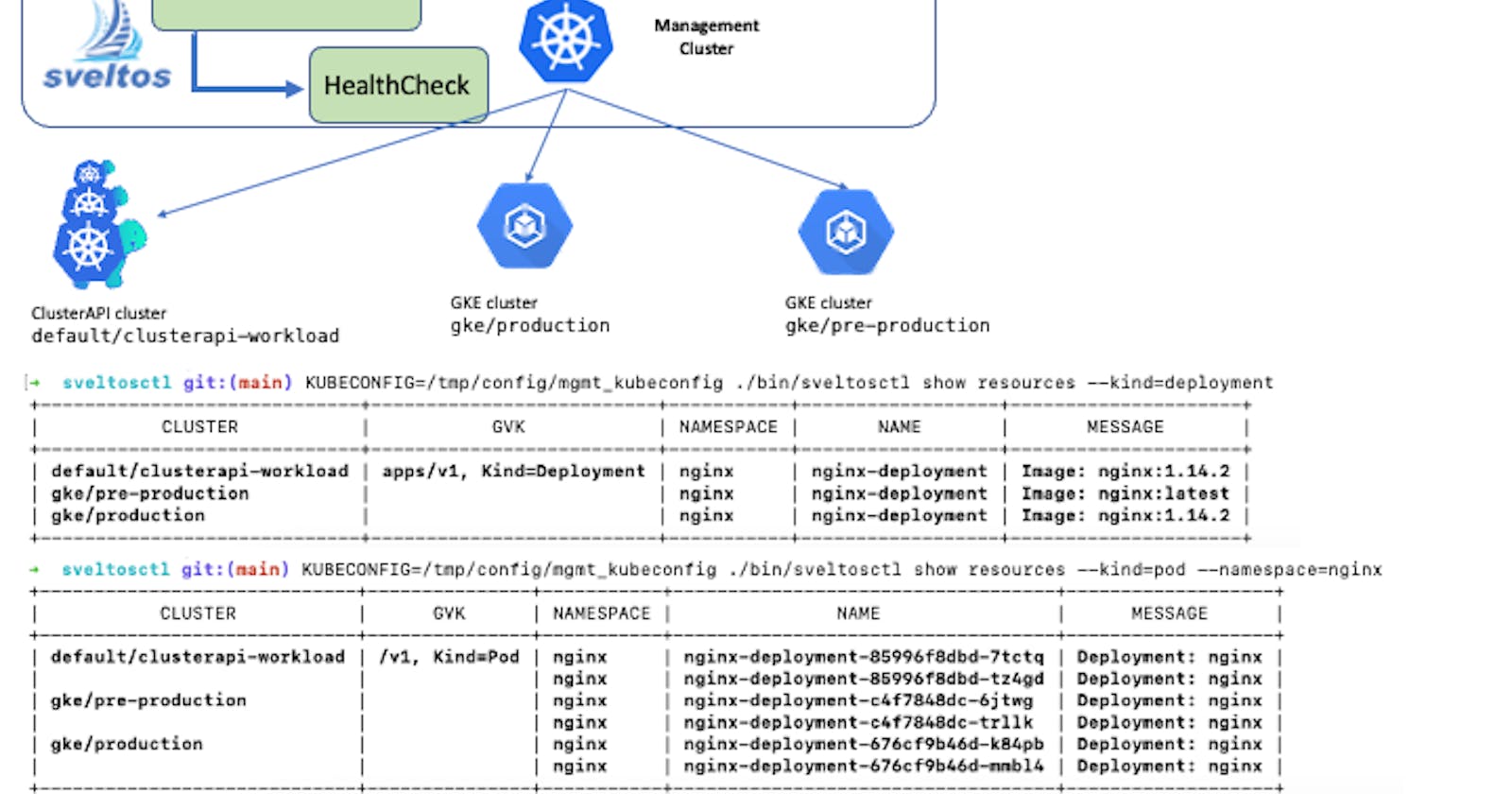

Here is an example of the output of the sveltosctl show resources command:

    ./sveltosctl show resources --kind=pod --namespace=nginx
    +-----------------------------+---------------+-----------+-----------------------------------+-------------------+
    | CLUSTER                     | GVK           | NAMESPACE | NAME                              | MESSAGE           |
    +-----------------------------+---------------+-----------+-----------------------------------+-------------------+
    | default/clusterapi-workload | /v1, Kind=Pod | nginx     | nginx-deployment-85996f8dbd-7tctq | Deployment: nginx |
    |                             |               | nginx     | nginx-deployment-85996f8dbd-tz4gd | Deployment: nginx |
    | gke/pre-production          |               | nginx     | nginx-deployment-c4f7848dc-6jtwg  | Deployment: nginx |
    |                             |               | nginx     | nginx-deployment-c4f7848dc-trllk  | Deployment: nginx |
    | gke/production              |               | nginx     | nginx-deployment-676cf9b46d-k84pb | Deployment: nginx |
    |                             |               | nginx     | nginx-deployment-676cf9b46d-mmbl4 | Deployment: nginx |
    +-----------------------------+---------------+-----------+-----------------------------------+-------------------+

This output shows the Pods in the nginx namespace across all the managed clusters. Each row contains the name of the cluster, the GVK (group, version, kind) of the resource, the namespace, the name of the resource, and a message about the resource.

In the next sections, we will cover all three of these concepts in detail with concrete examples.

ClusterHealthCheck

A ClusterHealthCheck instance is a Kubernetes custom resource (an instance of the ClusterHealthCheck CRD) that specifies which clusters Sveltos should collect information from. It has two main fields:

clusterSelector: This field is a Kubernetes label selector that specifies which clusters Sveltos should collect information from. In the example below, the clusterSelector field specifies that Sveltos should collect information from all clusters that have the label env=fv.

livenessChecks: This field references one or more HealthCheck instances. Each HealthCheck instance contains detailed instructions on which resources to collect.

    apiVersion: lib.projectsveltos.io/v1alpha1
    kind: ClusterHealthCheck
    metadata:
      name: production
    spec:
      clusterSelector: env=fv
      livenessChecks:
      - name: deployment
        type: HealthCheck
        livenessSourceRef:
          kind: HealthCheck
          apiVersion: lib.projectsveltos.io/v1alpha1
          name: deployment-replicas
      notifications:
      - name: event
        type: KubernetesEvent

When a ClusterHealthCheck instance is created in the management cluster, Sveltos will collect the information specified in the referenced HealthCheck in all managed clusters matching clusterSelector.
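
To make the selection step concrete, here is a minimal Python sketch of equality-based label matching, the kind of check that a selector such as env=fv implies. It is an illustration only, not Sveltos code: the function names and the labels assigned to the sample clusters are hypothetical, and real Kubernetes label selectors also support set-based expressions that this sketch does not handle.

```python
def parse_selector(selector: str) -> dict:
    """Parse a comma-separated list of key=value terms (equality-based only)."""
    pairs = {}
    for part in selector.split(","):
        part = part.strip()
        if not part:
            continue
        key, sep, value = part.partition("=")
        if not sep:
            raise ValueError(f"unsupported selector term: {part!r}")
        pairs[key.strip()] = value.strip()
    return pairs


def matches(selector: str, labels: dict) -> bool:
    """Return True when every key=value term of the selector is present in labels."""
    wanted = parse_selector(selector)
    return all(labels.get(key) == value for key, value in wanted.items())


# Hypothetical labels; the article does not say how these clusters are labelled.
clusters = {
    "default/clusterapi-workload": {"env": "fv"},
    "gke/pre-production": {"env": "fv", "region": "eu"},
    "gke/production": {"env": "prod"},
}

selected = sorted(name for name, labels in clusters.items() if matches("env=fv", labels))
print(selected)
```

Under these assumed labels, only the first two clusters would be selected, so the referenced HealthCheck would run in those two and not in gke/production.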

HealthCheck

A HealthCheck instance is a Kubernetes custom resource (an instance of the HealthCheck CRD) that specifies which resources Sveltos should collect.

It has the following fields in its Spec section:

Group: This field specifies the group of the Kubernetes resources that the HealthCheck is for.

Version: This field specifies the version of the Kubernetes resources that the HealthCheck is for.

Kind: This field specifies the kind of Kubernetes resources that the HealthCheck is for.

Namespace: This field can be used to filter resources by namespace.

LabelFilters: This field can be used to filter resources by labels.

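Script: This field can contain a Lua script that defines a custom health check. The script must define an evaluate function. Each collected resource is available to the script as obj, and the function must return a table (hs). In the examples in this article, hs carries a status, a message, and an optional ignore flag that tells Sveltos to skip the resource.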

    apiVersion: lib.projectsveltos.io/v1alpha1
    kind: HealthCheck
    metadata:
      name: deployment-replicas
    spec:
      group: "apps"
      version: v1
      kind: Deployment
      script: |
        function evaluate()
          hs = {}
          hs.status = "Progressing"
          hs.message = ""
          if obj.spec.replicas == 0 then
            hs.ignore = true
            return hs
          end
          if obj.status ~= nil then
            if obj.status.availableReplicas ~= nil then
              if obj.status.availableReplicas == obj.spec.replicas then
                hs.status = "Healthy"
                hs.message = "All replicas " .. obj.spec.replicas .. " are healthy"
              else
                hs.status = "Progressing"
                hs.message = "expected replicas: " .. obj.spec.replicas .. " available: " .. obj.status.availableReplicas
              end
            end
            if obj.status.unavailableReplicas ~= nil then
              hs.status = "Degraded"
              hs.message = "deployments have unavailable replicas"
            end
          end
          return hs
        end

In the example above, Deployments in all namespaces are collected. Deployments with requested replicas set to zero are ignored. Every other Deployment is collected, and the message indicates whether all requested replicas are available.
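
To see which status and message the deployment-replicas script produces for a given Deployment, the same decision logic can be traced in Python. This is a hedged port for illustration only: Sveltos runs the Lua script, not this code, and the evaluate_deployment name and the sample objects are hypothetical.

```python
def evaluate_deployment(obj: dict) -> dict:
    """Mirror the Lua evaluate() from the deployment-replicas HealthCheck."""
    # The Lua script leaves ignore unset by default; False models that here.
    hs = {"status": "Progressing", "message": "", "ignore": False}
    replicas = obj["spec"]["replicas"]

    # Deployments scaled to zero are skipped entirely.
    if replicas == 0:
        hs["ignore"] = True
        return hs

    status = obj.get("status")
    if status is not None:
        available = status.get("availableReplicas")
        if available is not None:
            if available == replicas:
                hs["status"] = "Healthy"
                hs["message"] = f"All replicas {replicas} are healthy"
            else:
                hs["message"] = f"expected replicas: {replicas} available: {available}"
        # Checked last, so it overrides whatever was set above.
        if status.get("unavailableReplicas") is not None:
            hs["status"] = "Degraded"
            hs["message"] = "deployments have unavailable replicas"
    return hs


healthy = evaluate_deployment({"spec": {"replicas": 2}, "status": {"availableReplicas": 2}})
degraded = evaluate_deployment(
    {"spec": {"replicas": 3}, "status": {"availableReplicas": 1, "unavailableReplicas": 2}}
)
scaled_down = evaluate_deployment({"spec": {"replicas": 0}})

print(healthy["message"])     # All replicas 2 are healthy
print(degraded["status"])     # Degraded
print(scaled_down["ignore"])  # True
```

Note that a Deployment with some available and some unavailable replicas ends up Degraded, because the unavailableReplicas check runs last.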

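Here is another HealthCheck example, which collects pod-to-deployment relationships. It reports every running Pod that carries an app label and uses the value of that label as the Deployment name.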

    apiVersion: lib.projectsveltos.io/v1alpha1
    kind: HealthCheck
    metadata:
      name: pod-in-deployment
    spec:
      group: ""
      version: v1
      kind: Pod
      script: |
        function setContains(set, key)
          return set[key] ~= nil
        end

        function evaluate()
          hs = {}
          hs.status = "Healthy"
          hs.message = ""
          hs.ignore = true
          if obj.metadata.labels ~= nil then
            if setContains(obj.metadata.labels, "app") then
              if obj.status.phase == "Running" then
                hs.ignore = false
                hs.message = "Deployment: " .. obj.metadata.labels["app"]
              end
            end
          end
          return hs
        end
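
The filtering done by the pod-in-deployment script can be traced with a small Python port. As before, this is only a sketch under stated assumptions: the evaluate_pod name and the second and third sample Pods are hypothetical, and Sveltos itself evaluates the Lua script.

```python
def evaluate_pod(obj: dict) -> dict:
    """Mirror the Lua evaluate() from the pod-in-deployment HealthCheck."""
    # Every Pod starts out ignored and is reported only if both checks pass.
    hs = {"status": "Healthy", "message": "", "ignore": True}
    labels = obj["metadata"].get("labels")
    if labels is not None and "app" in labels:
        if obj["status"]["phase"] == "Running":
            hs["ignore"] = False
            hs["message"] = "Deployment: " + labels["app"]
    return hs


pods = [
    {"metadata": {"name": "nginx-deployment-85996f8dbd-7tctq", "labels": {"app": "nginx"}},
     "status": {"phase": "Running"}},
    # Hypothetical Pods that the script skips.
    {"metadata": {"name": "pending-pod", "labels": {"app": "nginx"}},
     "status": {"phase": "Pending"}},
    {"metadata": {"name": "unlabelled-pod"}, "status": {"phase": "Running"}},
]

reported = {}
for pod in pods:
    hs = evaluate_pod(pod)
    if not hs["ignore"]:
        reported[pod["metadata"]["name"]] = hs["message"]
print(reported)
```

Because the script relies on the app label, the reported name is the label value rather than the result of looking up the Deployment that owns the Pod.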

Sveltosctl

sveltosctl is the Sveltos CLI. Its show resources command displays the collected information.

Here are some examples:

    ./sveltosctl show resources --kind=deployment
    +-----------------------------+--------------------------+----------------+-----------------------------------------+----------------------------+
    | CLUSTER                     | GVK                      | NAMESPACE      | NAME                                    | MESSAGE                    |
    +-----------------------------+--------------------------+----------------+-----------------------------------------+----------------------------+
    | default/clusterapi-workload | apps/v1, Kind=Deployment | kube-system    | calico-kube-controllers                 | All replicas 1 are healthy |
    |                             |                          | kube-system    | coredns                                 | All replicas 2 are healthy |
    |                             |                          | kyverno        | kyverno-admission-controller            | All replicas 1 are healthy |
    |                             |                          | kyverno        | kyverno-background-controller           | All replicas 1 are healthy |
    |                             |                          | kyverno        | kyverno-cleanup-controller              | All replicas 1 are healthy |
    |                             |                          | kyverno        | kyverno-reports-controller              | All replicas 1 are healthy |
    |                             |                          | projectsveltos | sveltos-agent-manager                   | All replicas 1 are healthy |
    | gke/pre-production          |                          | gke-gmp-system | gmp-operator                            | All replicas 1 are healthy |
    |                             |                          | gke-gmp-system | rule-evaluator                          | All replicas 1 are healthy |
    |                             |                          | kube-system    | antrea-controller-horizontal-autoscaler | All replicas 1 are healthy |
    |                             |                          | kube-system    | egress-nat-controller                   | All replicas 1 are healthy |
    ...
    +-----------------------------+--------------------------+----------------+-----------------------------------------+----------------------------+

    ./sveltosctl show resources
    +-----------------------------+--------------------------+-----------+------------------+---------------------+
    | CLUSTER                     | GVK                      | NAMESPACE | NAME             | MESSAGE             |
    +-----------------------------+--------------------------+-----------+------------------+---------------------+
    | default/clusterapi-workload | apps/v1, Kind=Deployment | nginx     | nginx-deployment | Image: nginx:1.14.2 |
    | gke/pre-production          |                          | nginx     | nginx-deployment | Image: nginx:latest |
    | gke/production              |                          | nginx     | nginx-deployment | Image: nginx:1.14.2 |
    +-----------------------------+--------------------------+-----------+------------------+---------------------+

Here are the available options to filter what show resources will display:

--group=<group>: Show only Kubernetes resources whose API group matches this value. If not specified, all groups are considered.

--kind=<kind>: Show only Kubernetes resources whose Kind matches this value. If not specified, all kinds are considered.

--namespace=<namespace>: Show Kubernetes resources in this namespace. If not specified, all namespaces are considered.

--cluster-namespace=<name>: Show Kubernetes resources from clusters in this cluster namespace (the part before the slash in the CLUSTER column). If not specified, all cluster namespaces are considered.

--cluster=<name>: Show Kubernetes resources in the cluster with the specified name. If not specified, all cluster names are considered.
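
The way these filters narrow the output can be sketched in Python. This is a hypothetical model, not the sveltosctl implementation: it assumes that every supplied filter must match at once and that Kind is compared case-insensitively, which is consistent with the --kind=pod --namespace=nginx example earlier but is not confirmed here. The Row type and the show_resources function are made up for illustration; the sample rows are taken from the tables in this article.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Row:
    cluster_namespace: str
    cluster: str
    group: str
    kind: str
    namespace: str
    name: str


def show_resources(rows, group=None, kind=None, namespace=None,
                   cluster_namespace=None, cluster=None):
    """Keep the rows that satisfy every filter that was supplied."""
    def keep(row):
        return (
            (group is None or row.group == group)
            # Assumption: --kind=pod matches Kind=Pod regardless of case.
            and (kind is None or row.kind.lower() == kind.lower())
            and (namespace is None or row.namespace == namespace)
            and (cluster_namespace is None or row.cluster_namespace == cluster_namespace)
            and (cluster is None or row.cluster == cluster)
        )
    return [row for row in rows if keep(row)]


rows = [
    Row("default", "clusterapi-workload", "", "Pod", "nginx", "nginx-deployment-85996f8dbd-7tctq"),
    Row("gke", "production", "", "Pod", "nginx", "nginx-deployment-676cf9b46d-k84pb"),
    Row("default", "clusterapi-workload", "apps", "Deployment", "kube-system", "coredns"),
]

pods = show_resources(rows, kind="pod", namespace="nginx")
print([f"{r.cluster_namespace}/{r.cluster}" for r in pods])

gke_only = show_resources(rows, cluster_namespace="gke")
print([r.name for r in gke_only])
```

With no filters supplied, the sketch returns every row, matching the "if not specified, all ... are considered" wording of each option.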

Audits

This feature can also be used for auditing. For example, suppose each managed cluster runs Kyverno with an audit policy that reports every Pod using an image with the latest tag. We can then instruct Sveltos to collect the audit results from all managed clusters using the following HealthCheck instance:

    apiVersion: lib.projectsveltos.io/v1alpha1
    kind: HealthCheck
    metadata:
      name: deployment-replicas
    spec:
      collectResources: true
      group: wgpolicyk8s.io
      version: v1alpha2
      kind: PolicyReport
      script: |
        function evaluate()
          hs = {}
          hs.status = "Healthy"
          hs.message = ""
          -- results and resources are optional, so fall back to an empty table
          for i, result in ipairs(obj.results or {}) do
            if result.result == "fail" then
              hs.status = "Degraded"
              for j, r in ipairs(result.resources or {}) do
                hs.message = hs.message .. " " .. r.namespace .. "/" .. r.name
              end
            end
          end
          -- reports without failures are not shown
          if hs.status == "Healthy" then
            hs.ignore = true
          end
          return hs
        end

Once the HealthCheck instance is created, we can use the sveltosctl show resources command to see consolidated results from all managed clusters:

    ./sveltosctl show resources
    +-------------------------------------+--------------------------------+-----------+--------------------------+-----------------------------------------+
    | CLUSTER                             | GVK                            | NAMESPACE | NAME                     | MESSAGE                                 |
    +-------------------------------------+--------------------------------+-----------+--------------------------+-----------------------------------------+
    | default/sveltos-management-workload | wgpolicyk8s.io/v1alpha2,       | nginx     | cpol-disallow-latest-tag | nginx/nginx-deployment                  |
    |                                     | Kind=PolicyReport              |           |                          | nginx/nginx-deployment-6b7f675859       |
    |                                     |                                |           |                          | nginx/nginx-deployment-6b7f675859-fp6tm |
    |                                     |                                |           |                          | nginx/nginx-deployment-6b7f675859-kkft8 |
    +-------------------------------------+--------------------------------+-----------+--------------------------+-----------------------------------------+
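
How the audit script turns a PolicyReport into the MESSAGE column can be traced with a Python port of its logic. This is an illustrative sketch, not Sveltos code: the evaluate_policy_report name and the sample report are hypothetical, and treating a missing results or resources list as empty is an assumption of the sketch.

```python
def evaluate_policy_report(obj: dict) -> dict:
    """Mirror the Lua evaluate() from the PolicyReport HealthCheck."""
    hs = {"status": "Healthy", "message": "", "ignore": False}
    # Assumption: a missing results or resources list is treated as empty.
    for result in obj.get("results") or []:
        if result.get("result") == "fail":
            hs["status"] = "Degraded"
            for r in result.get("resources") or []:
                # Same concatenation as the Lua script, including the leading space.
                hs["message"] += " " + r["namespace"] + "/" + r["name"]
    # Reports without any failure are dropped from the output.
    if hs["status"] == "Healthy":
        hs["ignore"] = True
    return hs


# Hypothetical report: one failing result and one passing result.
report = {
    "results": [
        {"result": "fail", "resources": [
            {"namespace": "nginx", "name": "nginx-deployment"},
            {"namespace": "nginx", "name": "nginx-deployment-6b7f675859"},
        ]},
        {"result": "pass", "resources": [
            {"namespace": "kube-system", "name": "coredns"},
        ]},
    ]
}

failing = evaluate_policy_report(report)
clean = evaluate_policy_report({"results": [{"result": "pass"}]})

print(failing["status"], repr(failing["message"]))
print(clean["ignore"])
```

Only resources attached to failing results reach the message, which is why the table lists just the resources that violate the policy.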

👏 Support this project

If you enjoyed this article, please check out the GitHub repo for the project. You can also star 🌟 the project if you found it helpful.

The GitHub repo is a good starting point: it contains the code, documentation, and examples, along with the latest news and updates on the project.

Thank you for reading!